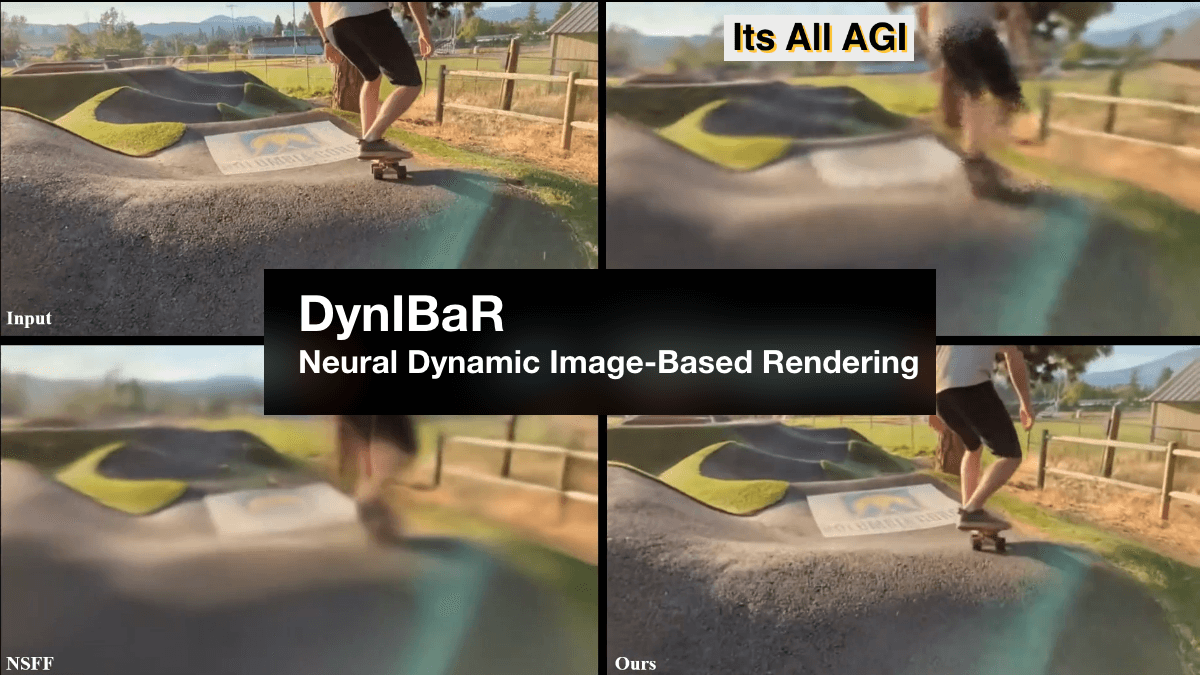

If you are interested in computer vision and 3D scene reconstruction, you may have heard of a recent paper that has been making waves in the research community: DynIBaR: Neural Dynamic Image-Based Rendering, by Zhengqi Li et al. from Google Research and Cornell Tech. The paper, which will be presented at CVPR 2023, introduces a method for space-time view synthesis from a monocular video of a dynamic scene. In other words, it takes a regular 2D video clip shot with a single camera and renders the scene from new viewpoints and at new points in time.

This is a notable advance. Earlier approaches to novel view synthesis typically required multiple cameras or specialized hardware, or were limited to static scenes or rigid objects, and neural methods that did accept monocular dynamic video tended to produce blurry or inaccurate renderings on long clips with complex motion and uncontrolled camera paths. DynIBaR targets those harder cases: it can handle complex, non-rigid motion, such as people dancing, animals running, or water splashing, and it produces high-quality renderings that preserve fine detail.

How does DynIBaR work?

The key idea is to avoid encoding the entire dynamic scene in the weights of a single neural network. Instead, DynIBaR builds on volumetric image-based rendering: to synthesize a novel view, it gathers image features from nearby input frames, warps them to account for scene motion, and blends them with a neural network. No ground-truth 3D data is required. The model is optimized on the input video itself, with the captured frames serving as the reconstruction targets.
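To make the warp-and-blend idea concrete, here is a deliberately tiny sketch in plain Python. It is not the paper's implementation: the integer horizontal shift is an assumed stand-in for a learned, motion-aware warp, the fixed weights stand in for blending weights a network would predict, and the names `warp_horizontal`, `blend`, and `synthesize_view` are hypothetical.

```python
from typing import List, Sequence

Image = List[List[float]]  # grayscale image stored as rows of pixel intensities


def warp_horizontal(image: Image, shift: int) -> Image:
    """Backward warp: output pixel x samples source pixel x - shift (edge-clamped).

    Toy stand-in for a motion-aware warp; a real system would use per-pixel
    geometry and motion rather than one global integer shift.
    """
    width = len(image[0])
    return [
        [row[min(max(x - shift, 0), width - 1)] for x in range(width)]
        for row in image
    ]


def blend(images: Sequence[Image], weights: Sequence[float]) -> Image:
    """Per-pixel weighted average of equally sized images."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    height, width = len(images[0]), len(images[0][0])
    return [
        [
            sum(w * img[y][x] for img, w in zip(images, weights)) / total
            for x in range(width)
        ]
        for y in range(height)
    ]


def synthesize_view(
    sources: Sequence[Image], shifts: Sequence[int], weights: Sequence[float]
) -> Image:
    """Warp each source frame toward the target view, then blend the results."""
    warped = [warp_horizontal(img, s) for img, s in zip(sources, shifts)]
    return blend(warped, weights)


# Two 1x4 "frames" taken on either side of the target view.
frame_a = [[0.0, 1.0, 2.0, 3.0]]
frame_b = [[2.0, 3.0, 4.0, 5.0]]
print(synthesize_view([frame_a, frame_b], shifts=[1, -1], weights=[1.0, 1.0]))
# prints [[1.5, 2.0, 3.0, 3.5]]
```

The point of the sketch is the structure: each source frame is first aligned to the target view and only then averaged, so the quality of the result depends on how well the warp models the scene's motion.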

DynIBaR Results

The results are impressive and point to applications in video editing, virtual reality, augmented reality, and 3D photography. For example, view synthesis of this kind lets users change the focus after capture, animate the camera position, stabilize shaky footage, and create stereo 3D effects.

Conclusion

DynIBaR is an exciting step forward in the field of neural image-based rendering and opens up new possibilities for creating immersive and realistic 3D experiences from ordinary videos. If you want to learn more about DynIBaR, you can read the paper on arXiv or watch the presentation at CVPR 2023.