Have you ever wanted to bring your textual descriptions to life as captivating 3D models?

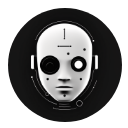

Well, imagine typing “a cute steampunk elephant” and witnessing a stunning 3D rendering of it materialize on your screen. It may sound like pure magic, but thanks to a groundbreaking paper by researchers from the International Digital Economy Academy (IDEA) and the University of Science and Technology of China, this seemingly fantastical concept is now a reality.

In this blog, we will delve into the remarkable method known as “DreamTime: An Improved Optimization Strategy for Text-to-3D Content Creation.” By seamlessly combining text-to-image diffusion models and neural radiance fields (NeRF), DreamTime generates realistic and diverse 3D content from text prompts.

Understanding Text-to-Image Diffusion Models and NeRF:

Text-to-image diffusion models, which are powerful deep neural networks, can synthesize images based on textual descriptions. Trained on vast datasets containing billions of image-text pairs sourced from the internet, such as the Laion5B dataset, these models employ a diffusion process to progressively convert random noise images into lifelike representations that align with the provided text.

On the other hand, neural radiance fields (NeRF) serve as a representation of 3D scenes. They encode color and density information for each point in space. By tracing rays through the scene and aggregating radiance along each ray, NeRF can generate high-quality 3D renderings. However, the reconstruction of a scene using NeRF typically requires a substantial number of input images.

The Inner Workings of DreamTime :

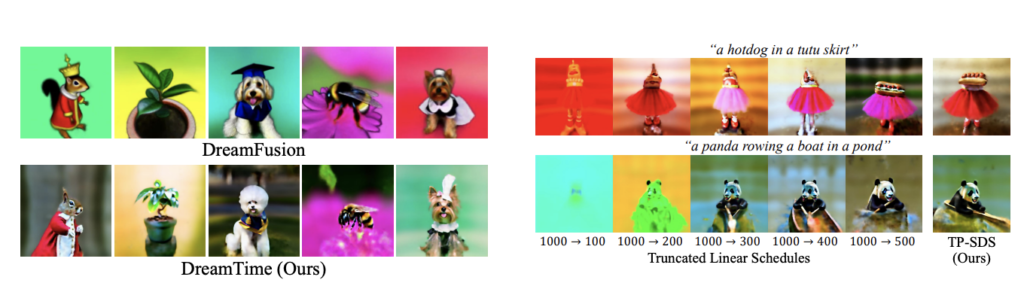

DreamTime harnesses the strengths of both text-to-image diffusion models and NeRF to produce 3D content from text. The core idea revolves around optimizing a NeRF, which is randomly initialized, with score distillation—an approach that transfers knowledge from a teacher model (the text-to-image diffusion model) to a student model (the NeRF).

The process of score distillation unfolds as follows:

- Firstly, given a text prompt, generate a set of target images using the text-to-image diffusion model at different timesteps during the diffusion process (ranging from 0, random noise, to 1, the final image).

- Next, initialize a NeRF with random weights and utilize it to render an image from a randomly chosen viewpoint.

- Then, compare the rendered image with the target images using a perceptual loss function that quantifies their similarity.

- Utilize gradient descent to update the NeRF weights, minimizing the perceptual loss.

- Finally, repeat steps 2-4 until convergence is achieved or a predetermined maximum number of iterations is reached.

The outcome is a NeRF capable of rendering realistic and diverse images of the 3D scene described by the text prompt from any desired viewpoint.

Advantages of DreamTime:

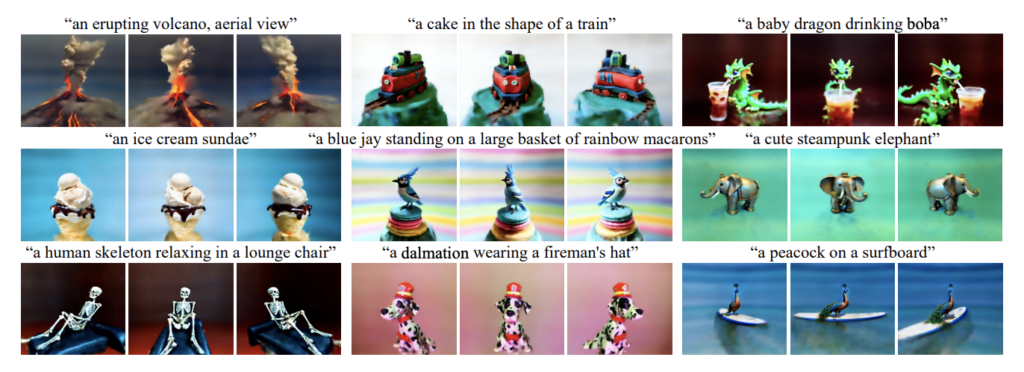

DreamTime surpasses previous methods for text-to-3D content creation in various aspects:

- Enhanced Quality and Diversity: DreamTime excels in producing higher quality and more diverse 3D models compared to existing methods utilizing adversarial loss or CLIP score as supervision. It effectively avoids common artifacts like saturated color and resolves the Janus problem, ensuring consistency between the front and back of an object.

- Handling Complexity: DreamTime can handle general and intricate text prompts encompassing diverse objects, scenes, and attributes. It possesses the ability to generate 3D content for any text prompt synthesizable by the text-to-image diffusion model, transcending limitations imposed by specific domains or datasets.

- Simplicity and Efficiency: Implementation and training of DreamTime are simple and efficient. The method solely requires a pre-trained text-to-image diffusion model and a randomly initialized NeRF, without necessitating additional data or network components. Furthermore, DreamTime exhibits faster convergence due to its improved optimization strategy.

Conclusion:

In conclusion, DreamTime presents an exciting breakthrough in the realm of text-to-3D content creation. By seamlessly combining text-to-image diffusion models and NeRF, DreamTime opens up new avenues for creative expression and applications in virtual reality. With the ability to generate realistic and diverse 3D models from any text prompt, DreamTime offers a world of possibilities.

Ultimately, this innovative method surpasses previous approaches in terms of quality, diversity, and handling complex prompts. Moreover, the simplicity and efficiency of DreamTime make it a practical solution for 3D content creation. In summary, DreamTime revolutionizes the way we bring textual descriptions to life, unlocking the magic of transforming words into captivating 3D creations.